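Create the personal library directory if needed, then install the source package:

```sh
R -q -e 'dir.create(Sys.getenv("R_LIBS_USER"), recursive = TRUE)'
R CMD INSTALL Rhpc_0.yy-yday.tar.gz
```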

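For a Fujitsu MPI environment (paths under `/opt/FJSV*`), the installation is run through `mpiexec -n 1`, and the MPI compiler and flags are passed explicitly with `--configure-args`:

```sh
R -q -e 'dir.create(Sys.getenv("R_LIBS_USER"), recursive = TRUE)'
mpiexec -n 1 /usr/local1/R-3.0.1.gcc.gotoblas/bin/R CMD INSTALL Rhpc_0.yy-yday.tar.gz \
  --configure-args='--with-mpicc=gcc43 --with-mpi-cflags=-I/opt/FJSVplang/include64/mpi/fujitsu --with-mpi-ldflags="-lmpi_f -lfjgmp64 -L/opt/FJSVpnple/lib -ljrm -L/opt/FJSVpnidt/lib -lfjidt -L/opt/FJSVplang/lib64 -lfj90i -lfj90f -lelf -lm -lpthread -lrt -ldl"'
```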

```
> library(Rhpc)
> Rhpc_initialize()
> cl<-Rhpc_getHandle(2)
> Rhpc_worker_call(cl,Sys.getpid)
[[1]]
[1] 10571

[[2]]
[1] 10572
```

```
$ R -q
> library(Rhpc)
> Rhpc_initialize()
> cl<-Rhpc_getHandle(4)
> Rhpc_worker_call(cl,Sys.getpid)
[[1]]
[1] 10571

[[2]]
[1] 10572

[[3]]
[1] 10573

[[4]]
[1] 10574

> Rhpc_finalize()
> q("no")
```

```r
# Save this file as test.R
library(Rhpc)
Rhpc_initialize()
cl<-Rhpc_getHandle()
Rhpc_lapply(cl, 1:10000, sqrt)
Rhpc_finalize()
```

Command line (replace PROCNUM with the number of MPI processes):

```sh
mpiexec -n PROCNUM ~/R/x86_64-unknown-linux-gnu-library/3.0/Rhpc/Rhpc CMD BATCH -q --no-save test.R
```

| Name | Last modified | Size | Description |
|---|---|---|---|
| Documents/ | 2015-06-26 15:26 | - | |
| RhpcBLASctl_0.23-42.tar.gz | 2023-02-11 09:17 | 41K | Control the number of threads on BLAS for R |
| Rhpc_0.21-247.tar.gz | 2021-09-05 07:09 | 66K | Permits `*apply()` style dispatch for HPC (source) |
| examples/ | 2016-01-13 20:27 | - | |
| old/ | 2023-02-10 20:53 | - | |

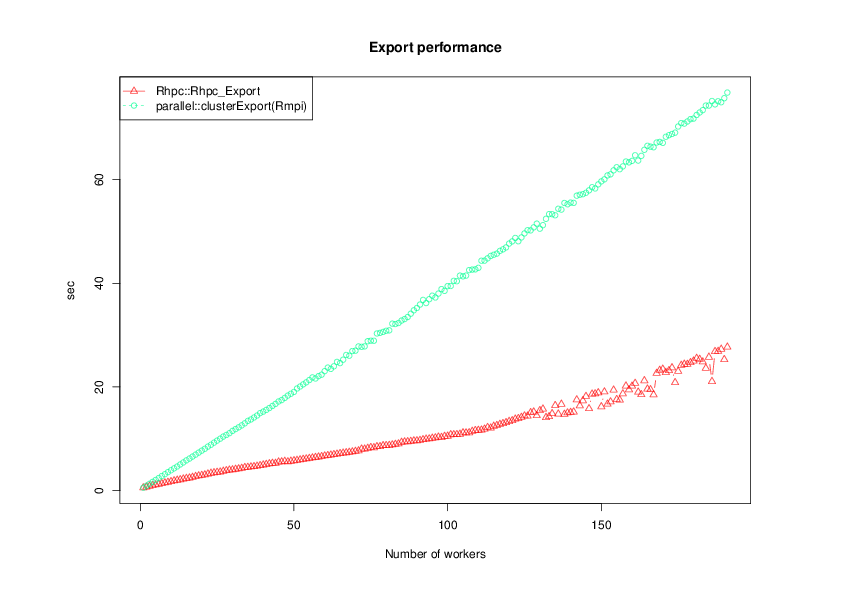

```r
library(Rhpc)
Rhpc_initialize()
cl<-Rhpc_getHandle()
set.seed(123)
N<-4e3
Rhpc_numberOfWorker(cl)
M<-matrix(runif(N^2),N,N)
# time the transfer of M to all workers
system.time(Rhpc_Export(cl,"M"))
f<-function()sum(M)
# every worker should return the same sum as the master
all.equal(rep(sum(M),Rhpc_numberOfWorker(cl)),unlist(Rhpc_worker_call(cl,f)))
Rhpc_finalize()
```

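```r
library(parallel)
library(Rmpi)
library(snow)  # makeMPIcluster() is provided by snow, not by parallel
cl<-makeMPIcluster()
set.seed(123)
N<-4e3
length(cl)
M<-matrix(runif(N^2),N,N)
# time the transfer of M to all workers
system.time(clusterExport(cl,"M"))
f<-function()sum(M)
# every worker should return the same sum as the master
all.equal(rep(sum(M),length(cl)),unlist(clusterCall(cl,f)))
stopCluster(cl)
```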
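Both export benchmarks move the same matrix, and the `all.equal` checks confirm that every worker ends up holding a copy of `M`. The following rough estimate of that payload is an addition for orientation and not part of the original benchmark. An N x N matrix of doubles with N = 4e3 carries N^2 * 8 bytes of data.

```r
# Estimate the size of the matrix M exported in the benchmarks (N = 4e3).
N <- 4e3
# Data payload alone: N^2 doubles at 8 bytes each.
payload_bytes <- N^2 * 8
cat(sprintf("data payload: %.0f bytes (%.1f MiB)\n",
            payload_bytes, payload_bytes / 2^20))

# Build the same matrix and compare with R's own size estimate, which
# also counts the object header and the dim attribute.
set.seed(123)
M <- matrix(runif(N^2), N, N)
print(object.size(M), units = "Mb")
```

The first line prints `data payload: 128000000 bytes (122.1 MiB)`. That is the amount of data that has to reach each worker.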

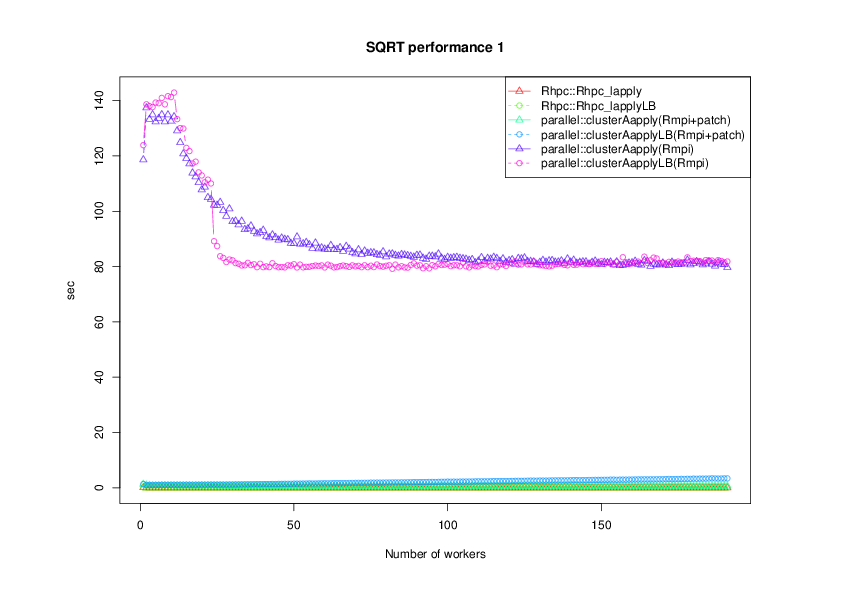

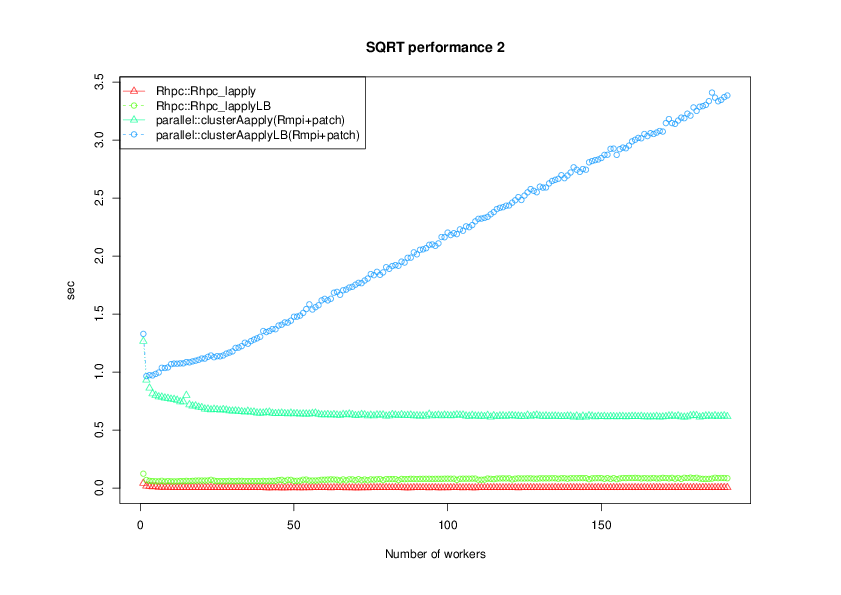

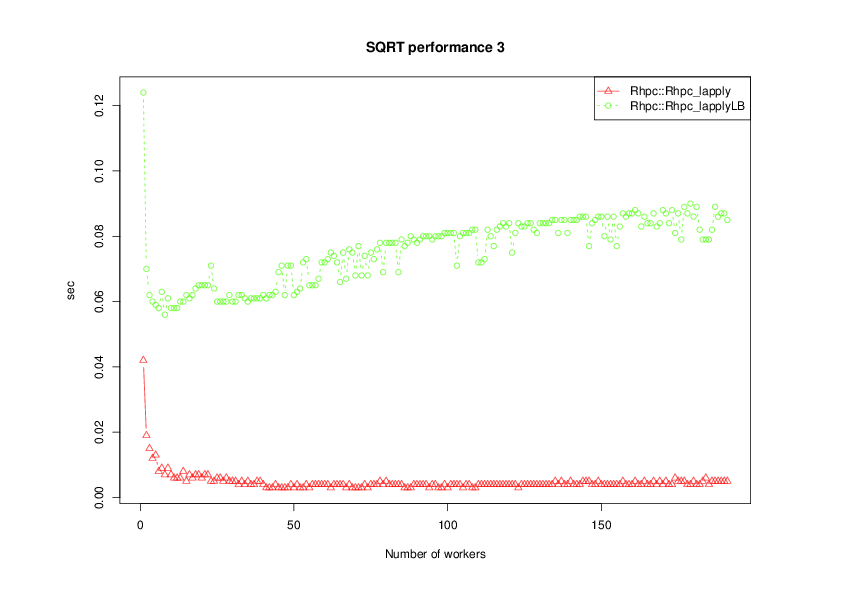

```r
library(Rhpc)
Rhpc_initialize()
cl<-Rhpc_getHandle()
system.time(ans<-Rhpc_lapply(cl, 1:10000, sqrt))
all.equal(sqrt(1:10000),unlist(ans))
Rhpc_finalize()
```

```r
library(Rhpc)
Rhpc_initialize()
cl<-Rhpc_getHandle()
system.time(ans<-Rhpc_lapplyLB(cl, 1:10000, sqrt))
all.equal(sqrt(1:10000),unlist(ans))
Rhpc_finalize()
```

```r
library(parallel)
library(Rmpi)
library(snow)  # makeMPIcluster() is provided by snow, not by parallel
cl<-makeMPIcluster()
system.time(ans<-clusterApply(cl,1:10000,sqrt))
all.equal(sqrt(1:10000),unlist(ans))
stopCluster(cl)
```

```r
library(parallel)
library(Rmpi)
library(snow)  # makeMPIcluster() is provided by snow, not by parallel
cl<-makeMPIcluster()
system.time(ans<-clusterApplyLB(cl,1:10000,sqrt))
all.equal(sqrt(1:10000),unlist(ans))
stopCluster(cl)
```
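A serial reference point can help put the parallel timings in context, although it is not part of the original comparison. The sketch below applies the same function to the same 10,000 inputs with base R's `lapply()` in a single process. Each task is only one `sqrt()` call, so comparing against this baseline can help judge how much of each parallel timing is dispatch overhead rather than computation.

```r
# Serial baseline for the *apply benchmarks: same input, same function.
x <- 1:10000
timing <- system.time(ans <- lapply(x, sqrt))
print(timing)
# Same correctness check as the parallel versions.
all.equal(sqrt(x), unlist(ans))
```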